Clean Architecture: Implementing testing patterns

When we write an application using Clean Architecture standards, we set it up for easy testing. There are different testing patterns to apply, and each has its own boundaries within the Clean Architecture design.

As developers, we want multiple layers of tests available. We should test very specific code with a unit test, but also test how different components interact with each other in integration tests. But what if we also want to verify that our external services are operating properly and interacting with our code? Let's add system testing to our list to cover this as well. Finally, we might want to confirm that our deployed application is working as expected, so we add acceptance tests to test the complete application chain across services.

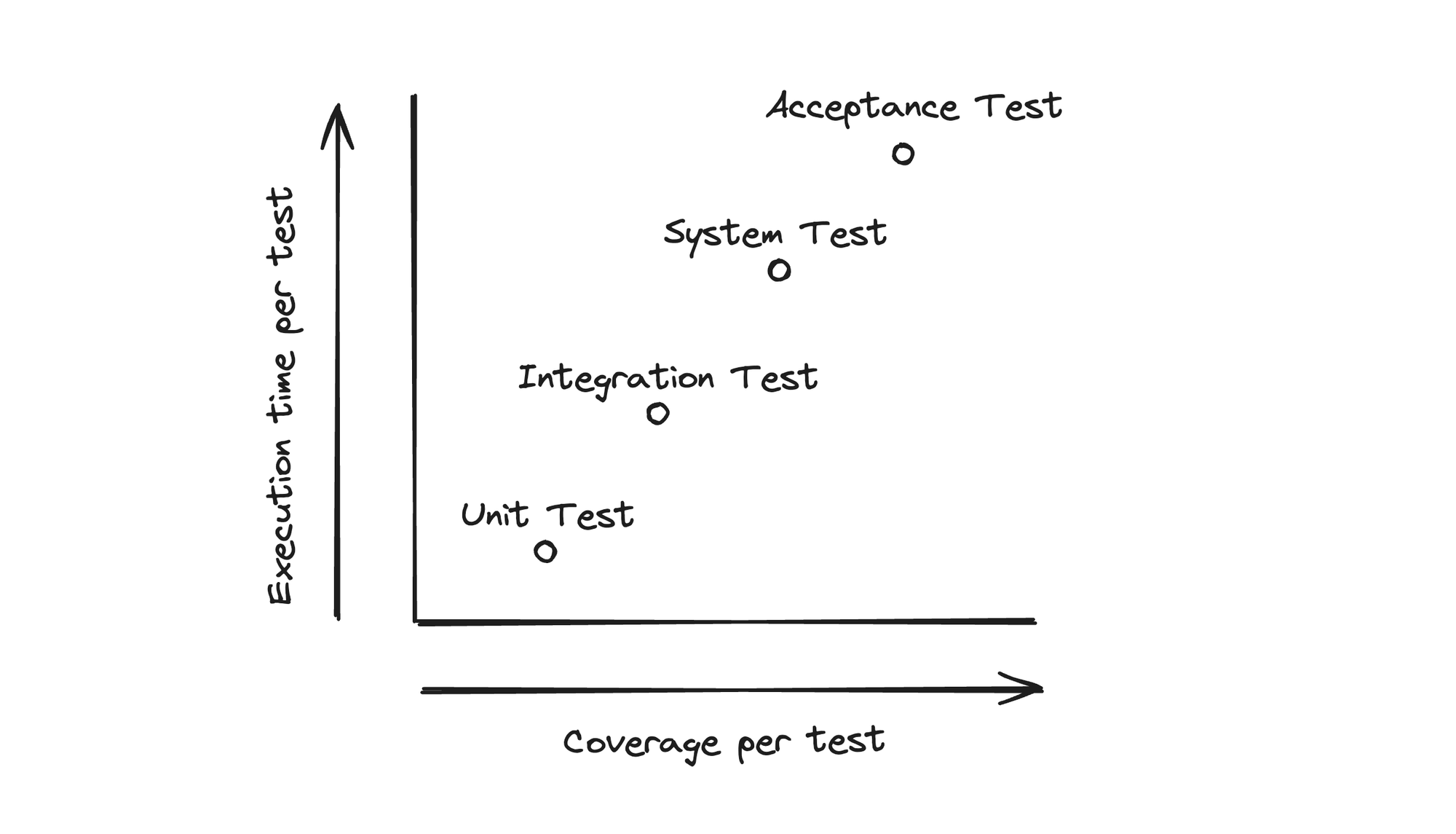

As you can see, we have already introduced four different methods to test our application. Let's compare the execution time (how fast a test completes) and the coverage gained from each of these testing methods.

As we move from unit tests toward end-to-end tests, the execution time increases, and so does the testing complexity. While a unit test has you test the input and output of a single function, end-to-end tests need to deal with a lot of complexity from dependencies and other code that can affect test execution.

Understanding where the boundaries lie, and how they differ between the testing methods, allows us to better define and prepare for writing more complex tests. To help manage complexity at each step, we also use mocking to scope our tests to the right size. Let's go through each method.

Unit tests

Unit tests are our smallest tests, which focus on testing a single function or piece of code. When we talk about single functions, I always try to focus on the public methods of a class.

Private methods are called from the public methods within the class, so most of the time they will be tested as part of the public function and therefore do not require their own tests.

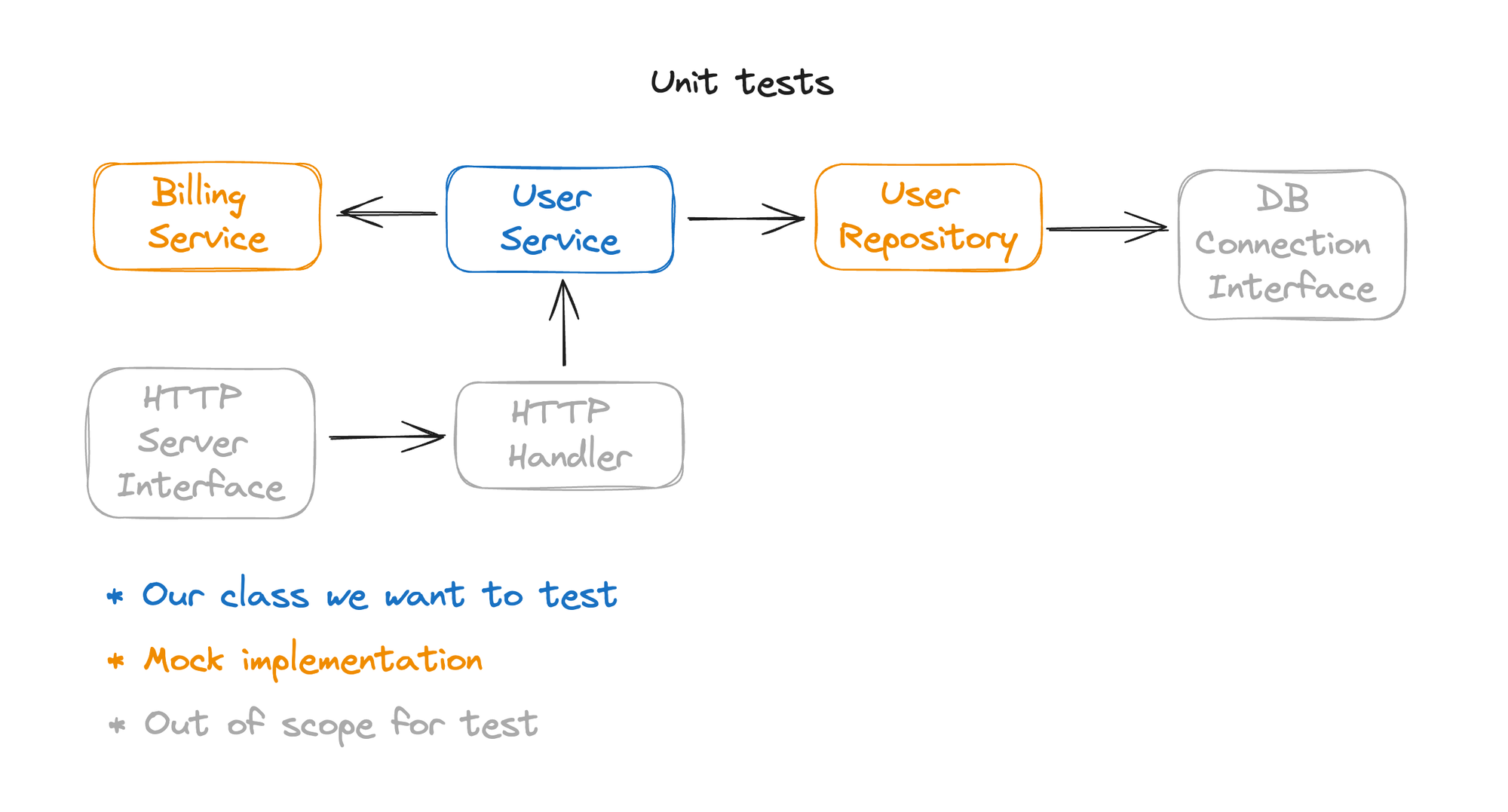

Let's look at the unit testing structure for the user service methods in this diagram:

As you can see in this diagram, we mock the direct dependencies of the User Service, along with everything beyond them. Likewise, anything that integrates with the class is out of scope for a unit test. Our mocks should return different results, such as a user repository throwing different exceptions, so that we can make sure the User Service methods act correctly for each mock return value.

When we apply Interface Segregation to our services and map the implementations of each interface to our infrastructure layer, it becomes very easy to mock out any dependency of our service.
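As a minimal sketch of this idea in Python, the example below unit tests a service against a mocked repository. The names `UserService`, `UserRepository`, `UserNotFound`, `display_name` and `get_name` are hypothetical and chosen only for illustration. One test covers a normal return value, the other covers the repository raising an exception.

```python
from typing import Optional, Protocol
from unittest.mock import Mock


class UserNotFound(Exception):
    """Raised by a repository when no user matches the given ID."""


class UserRepository(Protocol):
    """Narrow interface the service depends on (hypothetical)."""

    def get_name(self, user_id: int) -> str: ...


class UserService:
    def __init__(self, repository: UserRepository) -> None:
        self._repository = repository

    def display_name(self, user_id: int) -> Optional[str]:
        """Return the formatted name, or None when the user is unknown."""
        try:
            return self._format(self._repository.get_name(user_id))
        except UserNotFound:
            return None

    def _format(self, name: str) -> str:
        # Private helper: exercised through display_name, not tested directly.
        return name.strip().title()


def test_display_name_formats_repository_result() -> None:
    repository = Mock()
    repository.get_name.return_value = "  ada lovelace "
    assert UserService(repository).display_name(1) == "Ada Lovelace"
    repository.get_name.assert_called_once_with(1)


def test_display_name_returns_none_for_unknown_user() -> None:
    repository = Mock()
    # The mock simulates the repository failing to find the user.
    repository.get_name.side_effect = UserNotFound()
    assert UserService(repository).display_name(42) is None
```

Because the service only knows the narrow interface, the test never touches a database, and the private `_format` helper is covered through the public method.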

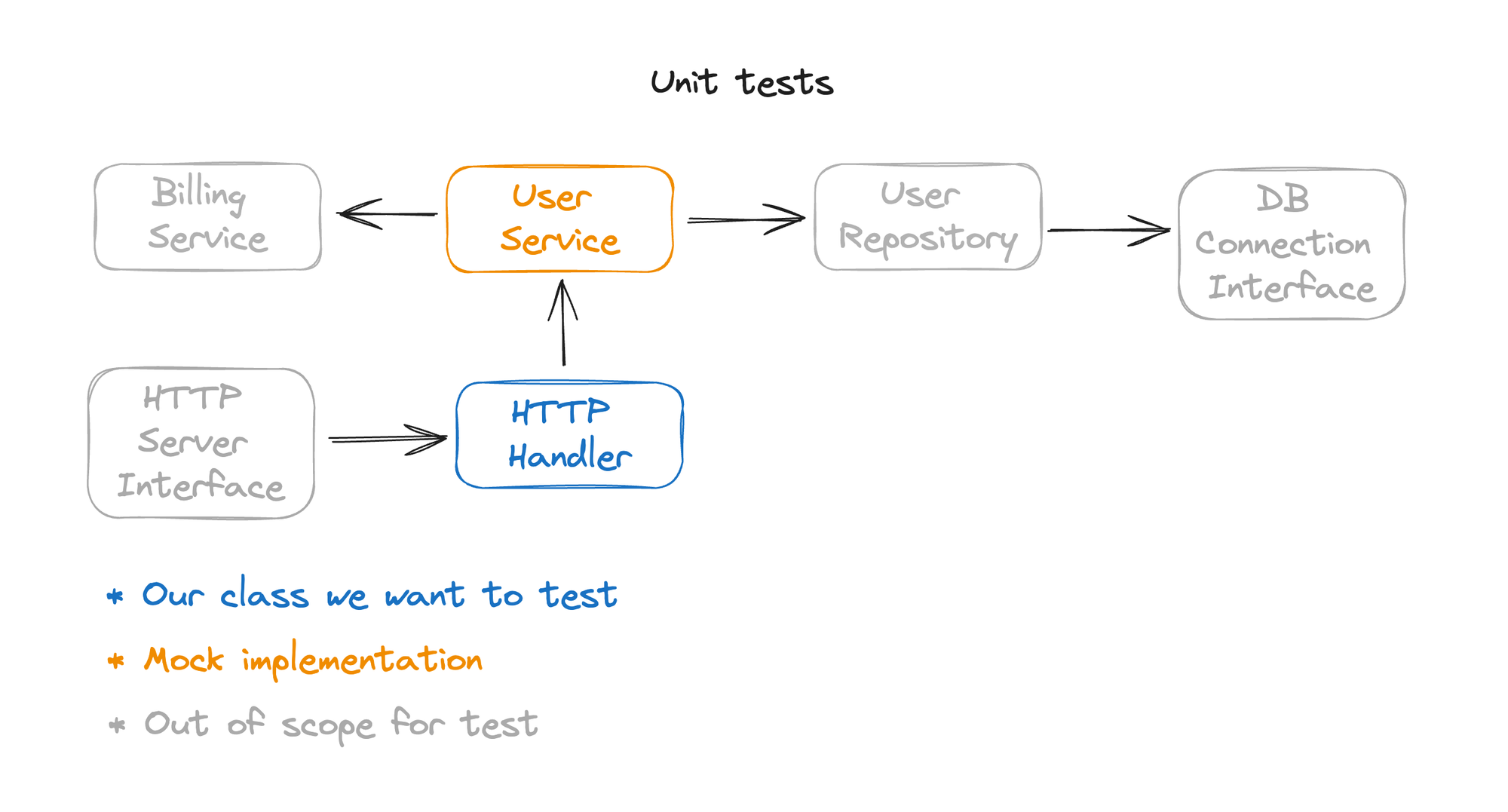

If we wanted to unit test our HTTP handler instead, our diagram would look like this:

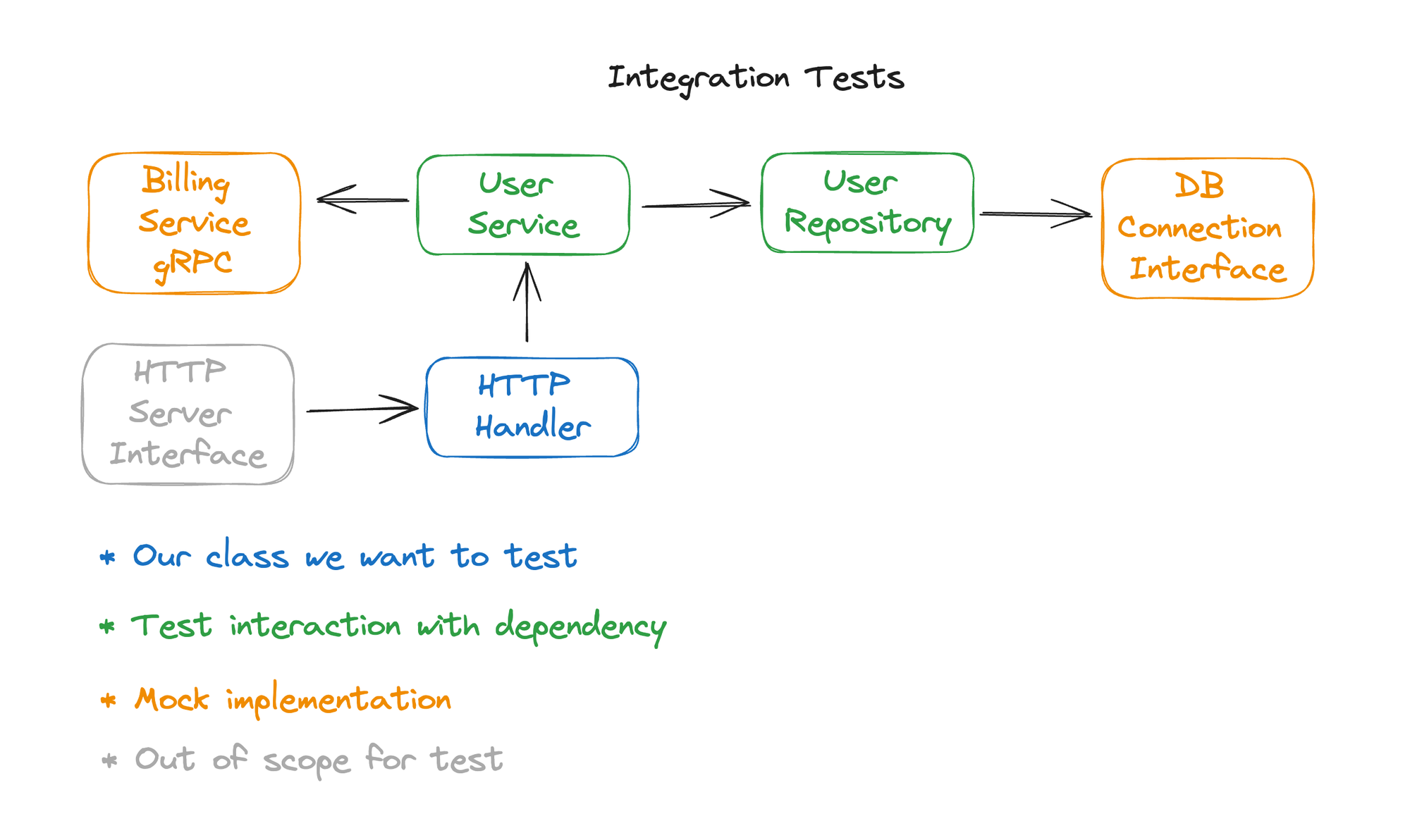
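A handler unit test could be sketched as follows, under the assumption of a framework-free handler that returns a status code and a JSON body. `UserHandler`, `get_user` and the service's `display_name` method are hypothetical names. The service is the handler's direct dependency, so it is the only thing that gets mocked here.

```python
import json
from typing import Tuple
from unittest.mock import Mock


class UserHandler:
    """Translates between HTTP-style input/output and the service."""

    def __init__(self, service) -> None:
        self._service = service

    def get_user(self, raw_id: str) -> Tuple[int, str]:
        try:
            user_id = int(raw_id)
        except ValueError:
            return 400, json.dumps({"error": "user id must be a number"})
        name = self._service.display_name(user_id)
        if name is None:
            return 404, json.dumps({"error": "user not found"})
        return 200, json.dumps({"name": name})


def test_get_user_returns_200_with_name() -> None:
    service = Mock()
    service.display_name.return_value = "Ada Lovelace"
    status, body = UserHandler(service).get_user("1")
    assert status == 200
    assert json.loads(body) == {"name": "Ada Lovelace"}
    service.display_name.assert_called_once_with(1)


def test_get_user_returns_404_when_service_finds_nothing() -> None:
    service = Mock()
    service.display_name.return_value = None
    status, _ = UserHandler(service).get_user("7")
    assert status == 404


def test_get_user_rejects_invalid_id_without_calling_service() -> None:
    service = Mock()
    status, _ = UserHandler(service).get_user("abc")
    assert status == 400
    service.display_name.assert_not_called()
```

The repository and everything behind the service never appear in this test; the mocked service hides them completely.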

Integration tests

In the unit test section, we mentioned that anything that integrates with our component is out of scope. This is where integration tests come in: while our separate "units" may work properly on their own, integration tests make sure the interaction between these units is set up correctly.

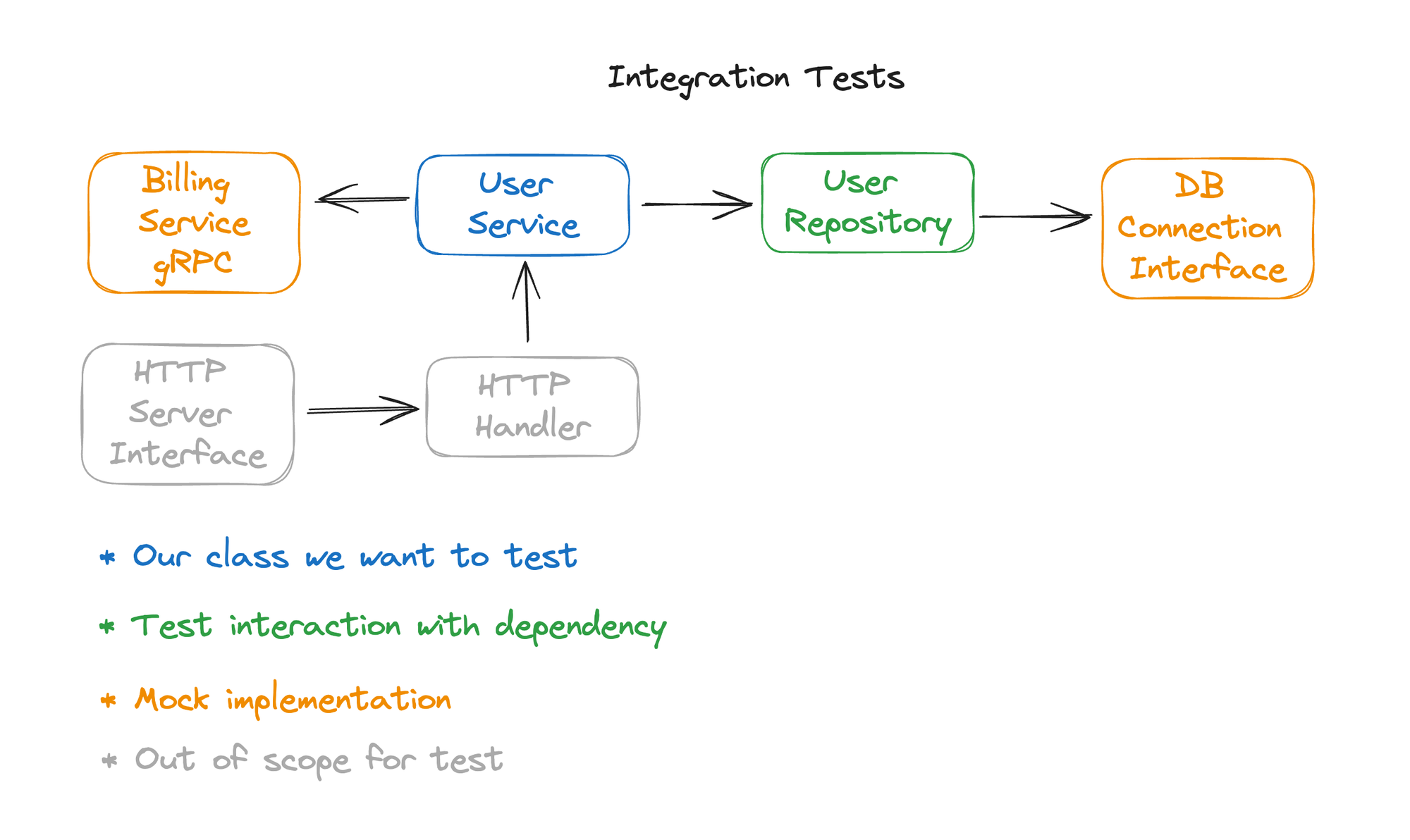

Let's grab our diagram again and update it for running an integration test against the User Service.

In this example, we test our integrations with other components until we reach the point where we would depend on other services. Our billing service is a different microservice, so we mock it. If we did not, the test would require a running gRPC backend. The same goes for our DB connection interface: we want to be able to run the integration test without needing to spin up a database.

You could mock this, for example, by swapping to a SQLite connector, which lets the mock write to a file (or just use memory). We do not need to test our Repository separately, as its code will also be (partially) covered by our integration tests.
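The sketch below illustrates this setup in Python, assuming a hypothetical `SqlUserRepository`, a `UserService.register` method and a billing client with a `create_account` call. The repository runs real SQL against an in-memory SQLite database, while the billing client is mocked because it belongs to another service.

```python
import sqlite3
from typing import Optional
from unittest.mock import Mock


class SqlUserRepository:
    """Real repository code, pointed at whatever connection it is given."""

    def __init__(self, connection: sqlite3.Connection) -> None:
        self._connection = connection

    def add(self, name: str) -> int:
        cursor = self._connection.execute(
            "INSERT INTO users (name) VALUES (?)", (name,)
        )
        self._connection.commit()
        return cursor.lastrowid

    def get_name(self, user_id: int) -> Optional[str]:
        row = self._connection.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None


class UserService:
    def __init__(self, repository: SqlUserRepository, billing_client) -> None:
        self._repository = repository
        self._billing_client = billing_client

    def register(self, name: str) -> int:
        user_id = self._repository.add(name)
        # In production this would be a call to the billing microservice.
        self._billing_client.create_account(user_id)
        return user_id


def test_register_persists_user_and_opens_billing_account() -> None:
    connection = sqlite3.connect(":memory:")  # no database server required
    connection.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
    )
    billing_client = Mock()  # stands in for the remote billing service
    repository = SqlUserRepository(connection)
    service = UserService(repository, billing_client)

    user_id = service.register("Ada")

    assert repository.get_name(user_id) == "Ada"
    billing_client.create_account.assert_called_once_with(user_id)
    connection.close()
```

The service and repository are exercised together, so a mistake in the SQL or in how the two are wired up would surface here, even though neither has a dedicated repository test.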

The next step is to move our integration test to the entry point of our service, the HTTP handler:

Our integration test now covers all units that are part of this service, without requiring other services to be available, as these are mocked out. If there are any hard-to-test functions or decision trees in any of our dependencies, we can use unit tests to cover these more complex scenarios.

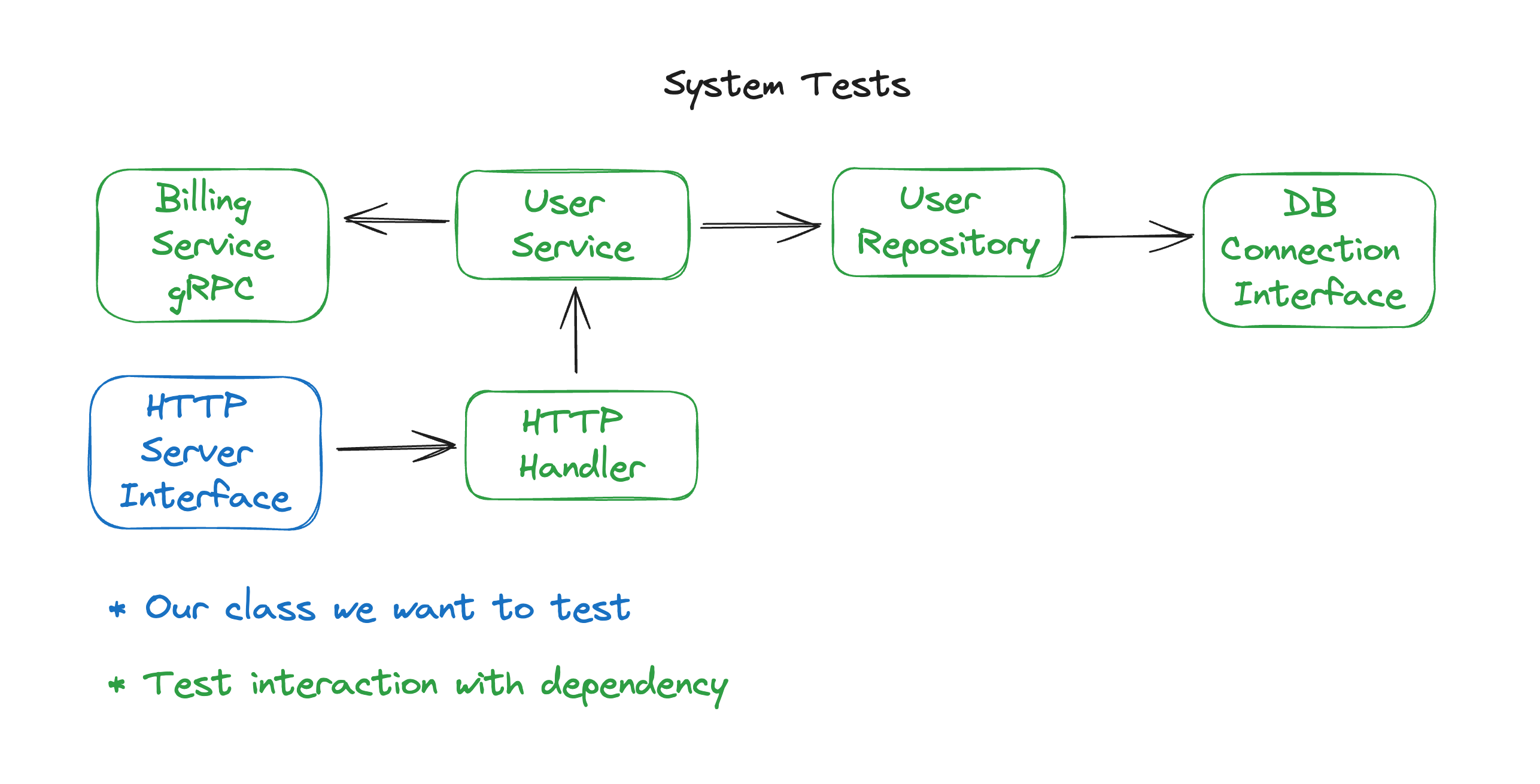

System tests

Once we have completed the integration test stage, we can look at how our system performs and interacts as a whole. To test our complete system, we need to have our external dependencies running too: our database instance, other microservices, or even external APIs (but more on this later).

Let's revisit our diagram again and see how it looks for system testing our service:

Perfect, we have tested our full system (although we are still zoomed in on one part of our broader application) on its interaction with the user service. Our system tests can handle different scenarios, covering both correctly handled business logic and validation errors on invalid input data.

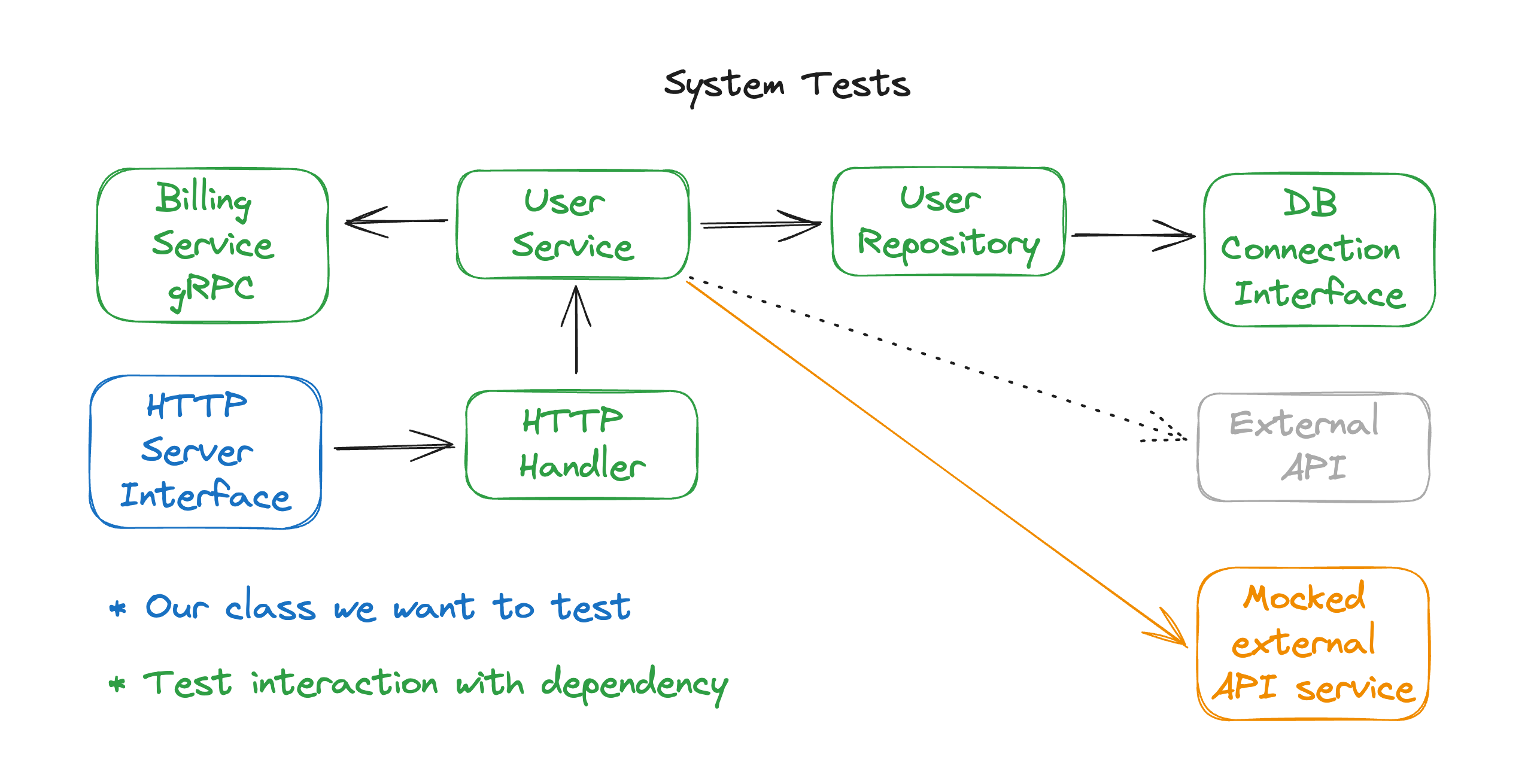

External APIs

But what if we are also working with an external API? Should our system test fail when the external API is down? Or what about when the external API changes?

The answer is yes. If you depend on the external API for your system tests to pass, and it changes or is unavailable, your code should not move further through the CI/CD process until this has been resolved. Otherwise, you introduce potential risks in your rollout to production.

But what if your external API is too complex to keep running tests against?

The answer: mock servers.

Mock servers differ from mocked interfaces in your code, as they are an actual deployed service, separate from your application. Your application needs to execute a network request in the same way as it would against the actual external API.

Your mock server can be built from the API specification of the external API (e.g. a GraphQL schema or OpenAPI spec), and should validate that your service sends the expected headers and input.
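To make this concrete, here is a rough sketch of a mock server using only the Python standard library. The `/charges` endpoint, the `Bearer test-token` value and the `amount` field are invented for this example and do not describe any real API. In practice the validation rules would come from the external API's specification.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class MockPaymentsAPI(BaseHTTPRequestHandler):
    """Stand-in for a hypothetical external API with one POST endpoint."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        raw = self.rfile.read(length)
        if self.path != "/charges":
            return self._reply(404, {"error": "unknown path"})
        # Validate headers the way the real API is assumed to.
        if self.headers.get("Authorization") != "Bearer test-token":
            return self._reply(401, {"error": "missing or invalid token"})
        try:
            payload = json.loads(raw)
        except ValueError:
            return self._reply(400, {"error": "body must be JSON"})
        if not isinstance(payload, dict) or not isinstance(payload.get("amount"), int):
            return self._reply(400, {"error": "amount must be an integer"})
        self._reply(201, {"id": "ch_1", "amount": payload["amount"]})

    def _reply(self, status: int, body: dict) -> None:
        data = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args) -> None:
        pass  # keep test output quiet


def start_mock_server() -> HTTPServer:
    # Port 0 asks the OS for any free port.
    server = HTTPServer(("127.0.0.1", 0), MockPaymentsAPI)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def post_charge(base_url: str, amount, token: str) -> int:
    """Client code under test: performs a real network request."""
    request = urllib.request.Request(
        f"{base_url}/charges",
        data=json.dumps({"amount": amount}).encode(),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
    # An empty ProxyHandler bypasses any proxy configured in the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(request, timeout=5) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code
```

A system test would start the server, point the application's base URL at `http://127.0.0.1:<port>`, and check both accepted and rejected requests. The client code is unchanged from production; only the URL differs.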

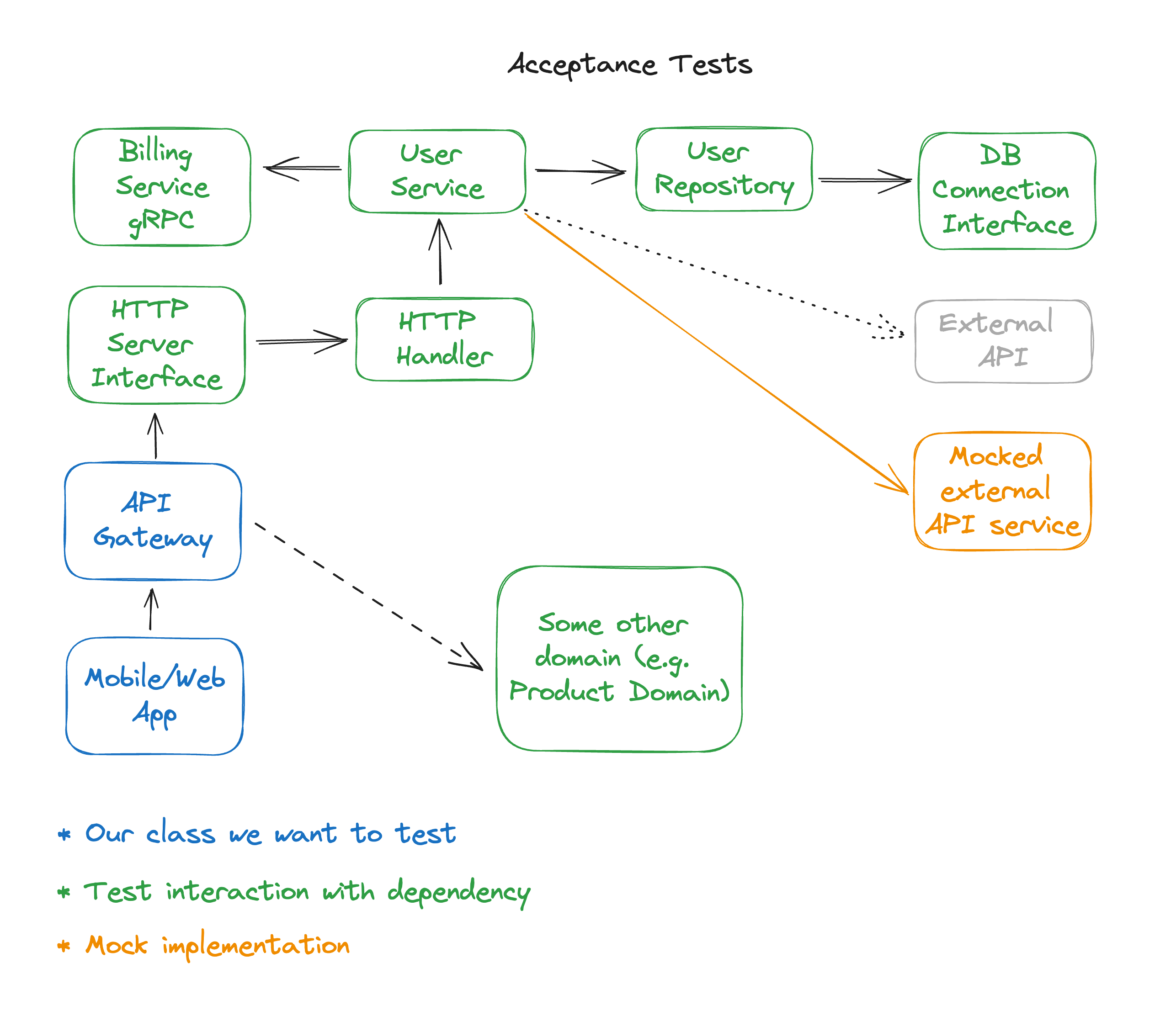

Acceptance tests

The final testing method in this article is acceptance testing. Acceptance tests use end-to-end testing techniques to validate whether the business logic matches the expectations of the intended end user, in agile also known as the Acceptance Criteria.

Acceptance tests can be done by hand by different types of users, but we can also automate the expected behaviour and outcomes using tools such as Cypress. When we look at acceptance testing, we no longer want to limit our tests to our API endpoints or system; we also want to cover the actual user behaviour. Let's update our diagram to add a front-end UI component as well:

It's important that our tests can run without impacting future runs. Ideally, a clean environment is loaded and seeded so that it is identical for every new test run.
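One way to get an identical starting state is a reset-and-seed step that runs before each test run. The sketch below assumes a hypothetical `users` table and fixed seed rows, and uses SQLite only as a stand-in for whatever database the acceptance environment really uses.

```python
import sqlite3
from typing import List, Tuple

# Fixed seed data: every run starts from exactly these rows (hypothetical).
SEED_USERS: List[Tuple[int, str]] = [(1, "Ada"), (2, "Grace")]


def reset_and_seed(connection: sqlite3.Connection) -> None:
    """Drop leftovers from earlier runs and load the known seed data."""
    connection.execute("DROP TABLE IF EXISTS users")
    connection.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
    )
    connection.executemany(
        "INSERT INTO users (id, name) VALUES (?, ?)", SEED_USERS
    )
    connection.commit()


def snapshot(connection: sqlite3.Connection) -> List[Tuple[int, str]]:
    """Return the current table contents in a stable order."""
    return connection.execute(
        "SELECT id, name FROM users ORDER BY id"
    ).fetchall()
```

Calling `reset_and_seed` at the start of every run means data created by an earlier run, such as a user registered through the UI, cannot leak into the next one.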

If our external API is still too complex and not suited to being cleaned or seeded before a test, we can still use a mock server at this stage.

Closing words

I hope this article has given you a better and clearer understanding of how different testing methods can be applied in your application, how each test impacts different elements of your system design, and which components are irrelevant for each test.

Finally, I want to mention that including meaningful tests goes beyond achieving a certain coverage threshold. Tests should complement your complex logic. Most of the simpler parts of your application do not require a unit test; a higher-level test should be sufficient.